Algorithm for Gesture Control using the A121 radar sensor

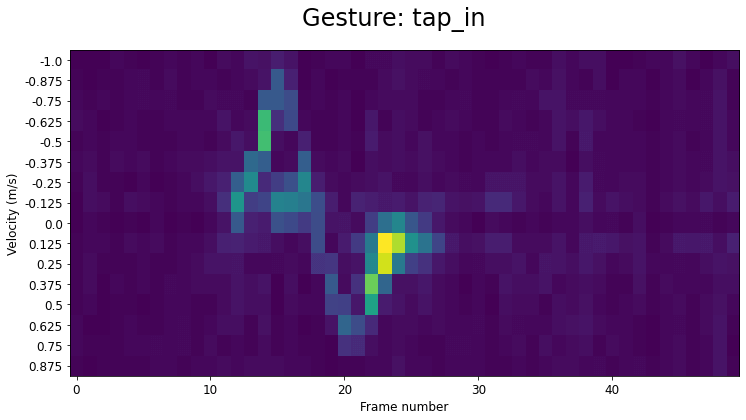

Acconeer’s A121 radar sensor measures the distance to, and velocity of, objects with high precision. In this example, the sensor is used to recognize four types of gestures made with a finger or a hand: tap in, tap out, double tap in, and wiggle. By connecting a function to each of these four movements, you can implement gesture control in devices such as headphones and speakers. This page contains everything you need to set it up.
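Connecting functions to the four gestures amounts to mapping each recognized label to an action. The sketch below is a minimal illustration of that idea, not code from the tutorial: the label strings, the `GestureDispatcher` class and the headphone actions are all hypothetical, and the handlers return strings where a real device would call its own functions.

```python
from typing import Callable, Dict, Optional

# The four gestures recognized in this example. The label strings are
# hypothetical; use whatever labels your trained model actually outputs.
GESTURES = ("tap_in", "tap_out", "double_tap_in", "wiggle")


class GestureDispatcher:
    """Hypothetical helper that maps gesture labels to device functions."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[], str]] = {}

    def register(self, gesture: str, handler: Callable[[], str]) -> None:
        # Reject labels outside the four known gestures to catch typos early.
        if gesture not in GESTURES:
            raise ValueError(f"unknown gesture: {gesture!r}")
        self._handlers[gesture] = handler

    def dispatch(self, gesture: str) -> Optional[str]:
        # Gestures without an assigned function are ignored (returns None).
        handler = self._handlers.get(gesture)
        return handler() if handler is not None else None


# Example: hypothetical headphone actions bound to three of the gestures.
dispatcher = GestureDispatcher()
dispatcher.register("tap_in", lambda: "play/pause")
dispatcher.register("double_tap_in", lambda: "next track")
dispatcher.register("wiggle", lambda: "volume down")

print(dispatcher.dispatch("double_tap_in"))  # prints "next track"
print(dispatcher.dispatch("tap_out"))        # prints "None" (no function bound)
```

Keeping the mapping in one table makes it easy to reassign gestures per product without touching the classifier.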

Software

This tutorial has two parts: in the first, a gesture classification model is defined and trained; in the second, the model is deployed to classify gestures in real time. The first part is implemented as a Jupyter notebook and the second as a Python script. If you do not already have a setup for running Python scripts and Jupyter notebooks, you can follow these steps to run them in VS Code:

- Download and install Python

- Install VS Code and set it up for Python by following the tutorial

- Follow the guide on how to run Jupyter notebooks in VS Code
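The tutorial's two-part structure, training a model offline and then classifying live data, can be sketched as follows. This is a minimal illustration, not the notebook's actual model: it swaps in a simple nearest-centroid classifier, assumes each gesture is summarized by a fixed-length window of per-frame values (for example, one distance estimate per sensor frame), and uses invented toy numbers. The names `train_centroids`, `classify` and `RealTimeClassifier` are hypothetical.

```python
import json
import math
from collections import deque
from typing import Deque, Dict, List, Optional, Sequence, Tuple

Vector = List[float]


# --- Part 1: training (the role of the notebook) ---
def train_centroids(samples: Sequence[Tuple[Vector, str]]) -> Dict[str, Vector]:
    """Average the feature vectors of each label into one centroid per gesture."""
    sums: Dict[str, Vector] = {}
    counts: Dict[str, int] = {}
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def classify(model: Dict[str, Vector], features: Vector) -> str:
    """Return the label whose centroid is nearest in Euclidean distance."""
    return min(model, key=lambda label: math.dist(model[label], features))


# --- Part 2: real-time deployment (the role of the script) ---
class RealTimeClassifier:
    """Classify a sliding window of the most recent per-frame values."""

    def __init__(self, model: Dict[str, Vector], window: int) -> None:
        self.model = model
        self.buffer: Deque[float] = deque(maxlen=window)

    def push(self, value: float) -> Optional[str]:
        self.buffer.append(value)
        # No prediction until the window holds `window` frames.
        if len(self.buffer) < self.buffer.maxlen:
            return None
        return classify(self.model, list(self.buffer))


# Toy, made-up feature windows (three values each); not recorded sensor data.
training = [
    ([30.0, 25.0, 20.0], "tap_in"),
    ([31.0, 24.0, 19.0], "tap_in"),
    ([20.0, 25.0, 30.0], "tap_out"),
    ([19.0, 26.0, 31.0], "tap_out"),
]
model = train_centroids(training)

# Hand the model from the training step to the deployment step as JSON.
model = json.loads(json.dumps(model))

live = RealTimeClassifier(model, window=3)
for frame_value in [29.0, 24.0, 21.0]:
    print(live.push(frame_value))  # prints None, None, then tap_in
```

The nearest-centroid rule keeps the sketch dependency-free; the model defined in the notebook may be quite different. Whatever the model, the window length used at deployment must match the feature length used in training.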

All the required scripts and pre-recorded data can be downloaded from Acconeer’s Innovation Lab GitHub.

The scripts use the libraries provided by Acconeer’s Exploration Tool. Follow the instructions found here to learn more about the tool and how to install it on your computer.
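Before running the notebook or the script, a quick check like the one below can confirm that the prerequisites are in place. It is a minimal sketch under stated assumptions: the import name `acconeer.exptool` (installed from PyPI as `acconeer-exptool`), the use of `ipykernel` for notebooks in VS Code, and the Python 3.8 floor are all assumptions, so consult the Exploration Tool instructions for the actual requirements.

```python
import importlib.util
import sys
from typing import Dict, Tuple

# Modules assumed to be needed by this tutorial; adjust the list as needed.
REQUIRED_MODULES = ("acconeer.exptool", "ipykernel")


def module_available(name: str) -> bool:
    """Return True if `name` can be found on the import path."""
    try:
        # For a dotted name, find_spec imports the parent package first.
        return importlib.util.find_spec(name) is not None
    except (ImportError, ValueError):
        # Raised e.g. when the parent package of a dotted name is missing.
        return False


def check_environment(min_python: Tuple[int, int] = (3, 8)) -> Dict[str, bool]:
    """Report which prerequisites are present; min_python is an assumed floor."""
    report = {f"python>={min_python[0]}.{min_python[1]}": sys.version_info >= min_python}
    for name in REQUIRED_MODULES:
        report[name] = module_available(name)
    return report


if __name__ == "__main__":
    for item, ok in check_environment().items():
        print(("OK      " if ok else "MISSING ") + item)
```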

GESTURE CONTROL

Example use case

Try it on your own and get in touch

If you try this, or work on something else, we’d love to hear about your project! Please get in touch with us at innovation@acconeer.com.